“Is that a Drone?”: Adventures with a Quadcopter

Posted in Photography on April 24th, 2014 at 00:04:30

So, I briefly mentioned this in another post about YouTube, but I bought myself a Phantom FC40 Quadcopter for my birthday.

It’s an all-in-one, easy-to-use, out-of-the-box flying platform. It comes with a camera that has wifi support for remote operation (“FPV,” or first-person view). It requires no experience flying any type of aircraft; it’s pretty much all automatic, and flying it is more like playing a video game than anything else. It normally retails for $500; I picked it up for $430 during a sale at B&H. (Since then, they’ve been almost constantly sold out, so either supply is tiny or demand has picked up a lot.)

It is the most exciting toy I have ever bought. Flying it is super neat, and the videos it takes are brilliant. For a long time I’ve wanted to get my pilot’s license, but I’ve never been able to set aside the money it would take. The Phantom is certainly no pilot’s license, but it still lets me see my neighborhood in a different way, which is part of what I was after.

I would like to do some mapping with the quad: stitching together super-local aerial imagery. But so far, I’ve been having too much fun just flying the thing around.

The FC40, which is the low-end, basic model, doesn’t have any remote telemetry, so I can’t tell how far, how fast, or how high I’m going. In theory, the transmitter reaches about 1,000 feet and the remote video link about 300 feet. I don’t think I’ve hit the 1,000-foot limit, but I’m pretty sure I’ve passed the 300-foot one.

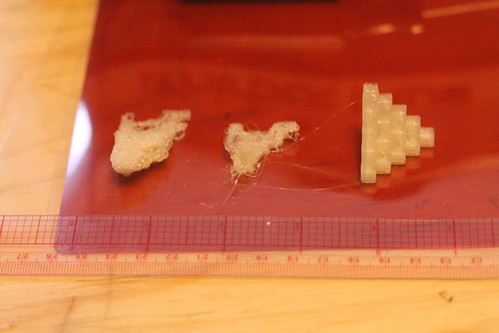

I need more practice at video editing: creating a compelling story is hard. (More on that in another post sometime.) But the hardware itself is terrific. It’s simple enough that even little kids can fly it. The copter is resilient: when I accidentally got turned around and flew it into a fence at full speed, it came out basically unscathed (minus a few replaceable prop guards). It’s stable: it holds steady even in 10 to 15 mph winds, and if the transmitter signal drops out, it returns to its starting position on its own.

I bought the Phantom, rather than building something more “DIY”/open source, because the price for what you get was terrific. By the time you buy motors, electronics, and a camera, you’re already looking at $350 to $400 in retail parts; getting all of that in a pre-assembled, ready-to-fly package was brilliant.

The support story on the Phantom is a bit weird, especially for the FC40, which is one of the newer models. Replacement parts are a problem: I’ve lost one of the camera’s vibration dampers, and I can’t figure out how to get a new one, because no one sells it as a part for the FC40. I expect that to improve gradually as the FC40 becomes as widespread as the other Phantom models.

Of course, any new technology comes with a set of FAQs, and the quad is no exception.

Is that a drone?

It really depends on what you mean by “drone.” Many people who ask are thinking of drones the way the US government uses them: far-flung, remotely operated machines for bombing or long-term surveillance. This isn’t that. On the other hand, if you mean “Can this thing fly and take video, possibly without me seeing the person operating it?” then yes, in that sense it is a drone. My usual answer is “I just call it my quadcopter.”

Is that a camera on the front?

Yep! It’s a 720p video camera that records to a memory card inside the camera. I can also connect it to an iPhone or Android phone for a live view, but realistically I don’t, because I’m not yet good enough to fly the quad without staring at it the whole time. It’s something I want to work on.

How high can it go?

Higher than I can see it. Under the most conservative rules for model aircraft, which are the most straightforward way to look at the Phantom, you can legally fly up to 400 feet, as long as the flight isn’t for commercial purposes and you are more than 5 miles from an airport. I don’t have good data, but I believe I have gone a little higher than that when flying in the middle of nowhere. Once it’s that high I can’t see the copter, and an errant gust of wind can cause trouble if I’m not careful, so I generally try to stay pretty close.
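The two numbers in that answer (a 400-foot ceiling and staying more than 5 miles from an airport) are easy to check before a flight if you know where you are launching from. Below is a minimal sketch, assuming you already have latitude/longitude for the launch site and the nearest airport; the function names are hypothetical, the check encodes only the two limits as this post describes them, and the distance uses the standard haversine great-circle formula with a mean Earth radius of 3,958.8 miles.

```python
import math

EARTH_RADIUS_MILES = 3958.8      # mean Earth radius
MAX_ALTITUDE_FEET = 400          # ceiling described in the answer above
MIN_AIRPORT_DISTANCE_MILES = 5   # "more than 5 miles from an airport"


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def flight_within_limits(launch, airport, planned_altitude_feet):
    """Hypothetical pre-flight check: True only if both limits are respected.

    launch and airport are (latitude, longitude) tuples in degrees.
    """
    distance = haversine_miles(*launch, *airport)
    return (distance > MIN_AIRPORT_DISTANCE_MILES
            and planned_altitude_feet <= MAX_ALTITUDE_FEET)
```

For example, `flight_within_limits((0.0, 0.0), (0.0, 1.0), 300)` returns `True`, since one degree of longitude at the equator is roughly 69 miles; raising the planned altitude to 500 feet makes it return `False`.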

How far can it go?

The transmitter is rated for up to 980 feet (I usually say ‘a quarter mile’), but some people have reported it working at up to twice that without any kind of booster. It uses the same frequencies as some wifi routers, so in a city, where those frequencies are crowded, its range is shorter than in the middle of nowhere.
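To put the rated 980 feet and the reported doubling in more familiar units, here is a small conversion sketch. It assumes only the standard definitions (1 mile = 5,280 feet, 1 foot = 0.3048 meters); the helper names are hypothetical.

```python
FEET_PER_MILE = 5280
METERS_PER_FOOT = 0.3048  # exact by definition


def feet_to_miles(feet):
    return feet / FEET_PER_MILE


def feet_to_meters(feet):
    return feet * METERS_PER_FOOT


rated_range_feet = 980                       # rated transmitter range
reported_range_feet = 2 * rated_range_feet   # "up to twice that", per some users

for label, feet in [("rated", rated_range_feet), ("reported", reported_range_feet)]:
    print(f"{label}: {feet} ft = {feet_to_miles(feet):.2f} mi = {feet_to_meters(feet):.0f} m")
```

This prints `rated: 980 ft = 0.19 mi = 299 m` and `reported: 1960 ft = 0.37 mi = 597 m`, so the rated range is a little under a fifth of a mile and “a quarter mile” is a generous round number.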

Is that legal?

As long as I’m more than 5 miles from an airport, staying low, and not bothering people, the commonly held belief is that it falls under the FAA’s model airplane rules and is legal to fly. The FAA makes contradictory statements all the time, though, so “not bothering people” becomes really key. 🙂

Do you go to MIT?

Nope.

Between this and the cost ($500 is generally viewed as “a lot cheaper than I would have thought it would be”), these make up most of the questions I get when flying the quad around Boston, and I *always* get questions. I’ve gone out flying on about 20 different occasions now, and I’ve never *not* gotten questions from *someone*. 🙂