Lars Aronsson on the OSM list said:

The result from this is Steve’s current data model and the fact that the rest of us accept this as a viable solution. Those who don’t, because they know more of GIS, like Christopher Schmidt, are repelled by everything they find under the hood of OSM.

Part of my response:

I’m actually not repelled by everything. It’s simply a different choice than I would make. Specifically:

- OSM uses topology as its base storage. Topology is good for making graphs, which is important when you need to do routing. For this reason, it seems to me that OSM was built towards the goal of creating driving directions. Great goal for a project to have. However,

- Most geo-software uses Simple Features — not topology — for handling data. The result is very different — Simple Features are designed for making maps. If you’d like evidence, look at how the mapnik maps are built: the topology is turned into simple features, and stored in PostGIS. My MapServer demos just under a year ago worked the same way.

The difference to me is simple:

If I want to draw an OSM feature on a map, I have to fetch a large number of pieces of data from the API individually, and combine them to create a geographic feature.

Example:

Way ID 4213747:

- 1 way.

- 21 segments.

- 22 nodes.

So, to visualize this one way, I have to make 44 fetches to the API.
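To make the arithmetic concrete, here is a minimal Python sketch of that assembly. The `FakeApi` class, its `get` method, and the dictionary layout are hypothetical stand-ins for the OSM API, not its real interface; the sketch only assumes one fetch per way, segment, and node.

```python
from dataclasses import dataclass


@dataclass
class FakeApi:
    """Hypothetical in-memory stand-in for the API; counts every fetch."""
    ways: dict
    segments: dict
    nodes: dict
    fetches: int = 0

    def get(self, kind, ident):
        self.fetches += 1
        return getattr(self, kind)[ident]


def build_line(api, way_id):
    """Assemble a drawable coordinate list from way -> segments -> nodes."""
    way = api.get("ways", way_id)
    fetched_nodes = {}
    coords = []
    for seg_id in way["segments"]:
        start, end = api.get("segments", seg_id)
        for node_id in (start, end):
            if node_id not in fetched_nodes:  # fetch each node only once
                fetched_nodes[node_id] = api.get("nodes", node_id)
        if not coords:
            coords.append(fetched_nodes[start])
        coords.append(fetched_nodes[end])
    return coords


# Made-up data shaped like the example: 1 way, 21 segments, 22 nodes.
nodes = {i: (i * 0.001, i * 0.001) for i in range(22)}
segments = {i: (i, i + 1) for i in range(21)}
ways = {4213747: {"segments": list(range(21))}}

api = FakeApi(ways=ways, segments=segments, nodes=nodes)
line = build_line(api, 4213747)
print(len(line), "coordinates after", api.fetches, "fetches")
```

Even with each node fetched only once, the count is 1 + 21 + 22 fetches before anything can be drawn.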

Now, if I switch to a simple features model:

JSON Simple Feature output of same geometry

I’m given a geometry (“Line”), list of coordinates, and list of properties. (This is JSON output: you can also see it as html by adding ‘.html’ to the end, or as atom by adding ‘.atom’ to the end.)

“Line” can also be “Polygon”, or “Point”. (Or “MULTIPOLYGON”, etc., though FeatureServer doesn’t support those.)

This is one fetch. I can now draw the feature. I can also query for other features which have the same name, and get the information for those, too:

Attribute query on name

This shows me that there is also a feature, ID 4213746, which has the same name. I can draw all these features on a map with the output of one query.

In OSM, that would be 88. 88 queries to the API, just so I can display two features — not to mention the fact that at the moment, there’s no way to query attributes quickly.
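By comparison, here is a rough Python sketch of the simple features side. The JSON layout, the coordinates, and the street name are made up for illustration (only the two IDs come from the example above), and `query_by_name` is a hypothetical helper, not FeatureServer's actual query interface.

```python
import json

# Hypothetical response body: a single fetch returns complete features,
# each with a geometry type, a coordinate list, and properties.
RESPONSE = """
[
  {"id": 4213747,
   "geometry": {"type": "Line", "coordinates": [[0.0, 0.0], [0.001, 0.001]]},
   "properties": {"name": "Example Street"}},
  {"id": 4213746,
   "geometry": {"type": "Line", "coordinates": [[0.001, 0.001], [0.002, 0.001]]},
   "properties": {"name": "Example Street"}},
  {"id": 1,
   "geometry": {"type": "Line", "coordinates": [[0.5, 0.5], [0.6, 0.5]]},
   "properties": {"name": "Other Road"}}
]
"""


def query_by_name(features, name):
    """Return every feature whose 'name' property matches exactly."""
    return [f for f in features if f["properties"].get("name") == name]


features = json.loads(RESPONSE)  # one response, already drawable
matches = query_by_name(features, "Example Street")
for feature in matches:
    geometry = feature["geometry"]
    print(feature["id"], geometry["type"], len(geometry["coordinates"]))
```

Each match already carries its own coordinates, so nothing further has to be fetched or stitched together before drawing.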

If there were a strong reason for storing topology (that is, if OSM was really not about maps, and was instead about making driving directions), this could make sense. In fact, it may make sense: I may have a serious lack of understanding about how the project data is being used. However, I think that the most common usage of OSM is *making maps*. In fact, Steve even backs me up on this, in his post:

OpenStreetMap is driven by this principle that we just want a fscking map.

Topology makes a graph, not a map. This is the reason why I’m in favor of a simple features-based data model: Features-based models are what you use for making maps. Topology is what you use for doing analysis.

The upshot of this? The tools to make topology out of simple features *already exist*: GRASS will do it. PostGIS + pgdijkstra will do it. Any application out there which needs topology knows how to get it, because mapping data is almost always distributed as something that isn’t topological. These are all technical problems: mapping back and forth is possible. The best way to do it is hard to determine, but the OSM project has no shortage of hard-working participants, and I’m sure that over time we will see easier-to-use UIs and editors for editing and creating data.
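As a rough illustration of why going from simple features to topology is mechanical, here is a minimal Python sketch that turns lines into a graph by treating shared coordinates as shared nodes. This is not how GRASS or pgdijkstra work internally; the `build_graph` helper and the sample coordinates are assumptions for illustration, and real data would need near-identical coordinates snapped together first.

```python
from collections import defaultdict


def build_graph(lines):
    """Build an undirected adjacency map from lists of (x, y) tuples.

    Consecutive coordinates in a line become an edge; lines that share
    an identical coordinate end up connected through that node.
    """
    graph = defaultdict(set)
    for coords in lines:
        for a, b in zip(coords, coords[1:]):
            graph[a].add(b)
            graph[b].add(a)
    return graph


# Two made-up lines that meet at (1.0, 0.0).
lines = [
    [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],
    [(1.0, 0.0), (1.0, 1.0)],
]

graph = build_graph(lines)
for node in sorted(graph):
    print(node, "->", sorted(graph[node]))
```

The junction at `(1.0, 0.0)` ends up with three neighbours, which is the kind of connectivity a routing algorithm needs.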

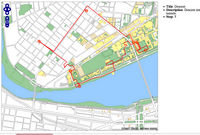

Went out for a late night walk tonight: though my path was a bit unclear, I think I made a decently accurate map:
